The idea of machines that can build even better machines sounds like sci-fi, but the concept is becoming a reality as companies like Cadence tap into generative AI to design and validate next-gen processors that also use AI.

In the early days of integrated circuits, chips were designed by hand. In the more than half a century since then, semiconductors have grown so complex and their physical features so small that it’s only possible to design chips using other chips. Cadence is one of several electronic design automation (EDA) vendors building software for this purpose.

Even with this software, the process of designing chips remains time-consuming and error-prone. But with the rise of generative AI, Cadence and others have begun exploring new ways to automate these processes.

I remember an experiment in the late 90s where they had “AI” from then (fuzzy logic and some other tech) design EPROM chips that had to generate a very specific frequency, or something like that.

They ended up with 20 chips, and 20 different designs. They all worked, but…

Each one was programmed in a way that worked on that chip, and that chip alone. Copy the programming from one chip to another, and it would not work.

Some chips had redundant circuits that connected to nothing, just sitting there. When those circuits were removed, the chip would fail too, even though those circuits in principle didn’t seem to do anything since, again, they weren’t connected to anything.

Not a single one had a correct solution that actually made sense.

Basically the system just kept tweaking each chip until it got something that worked.

Current LLMs are more powerful but still operate in many similar ways. You can’t ever trust their output, so you’ll have to check everything they do manually to get any remote kind of trust in the system, but how do you even go about testing whatever random crap comes out on an AI chip?

Can you imagine trying to debug that? I’d rather pull my own nails out.

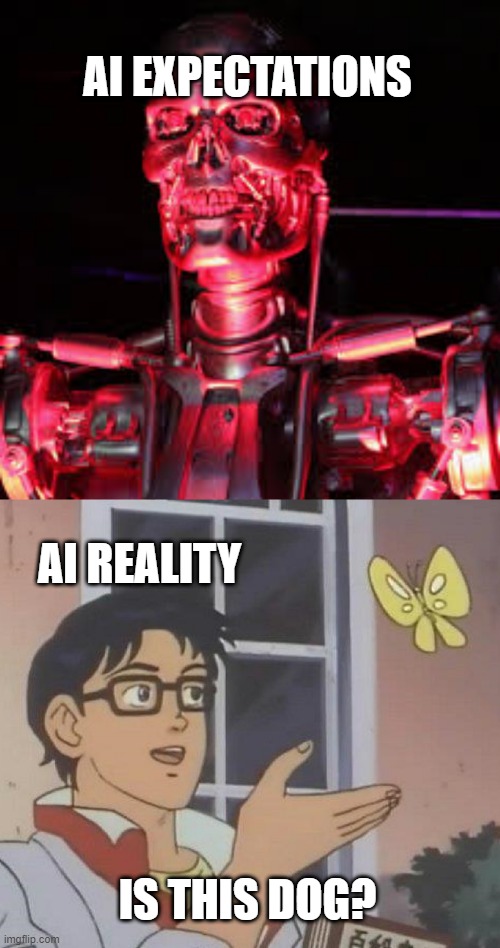

Here’s a clear description of the image at that URL:

It’s a two-panel meme. The top panel shows a shiny, red-lit robot skeleton reminiscent of the Terminator with bold white text above it that says “AI EXPECTATIONS.” Below it, the second panel uses the classic “Is this a pigeon?” anime still (a character gesturing toward a butterfly) with overlaid text reading “AI REALITY” above and “IS THIS DOG?” below. The joke contrasts lofty, futuristic expectations of artificial intelligence with a humorous reality of AI misidentifying a butterfly as a dog.

AI is getting better all the time. Eventually it will surpass human intelligence, but that seems decades away. Some challenges that remain are:

- Untrustworthy proponents: even if they were telling the truth NOW, that would be inconsistent with their past lies.

- Energy utilization costs

- Unethical training practices, note how like each of the above this is independent of its actual capabilities

- While we are at it, how many “AI” answers in the past have been curated with manual effort, or even are due more (sometimes exclusively?) to human answers placed into the area where the supposedly, and falsely reported, answers from “AI” were supposed to have been. I concede this may be a shrinking pool of answers (yet for all we know it could even be a growing one? as in waves of propagation where answers that were previously impossible via AI, become possible, but then there are even more answers beyond that that remain impossible, until AI again catches up to those, and the wave moves forward).

- Reliability - as in how many answers are worth paying attention to vs. must be discarded (remember those people who trusted the AI answer and thereby had to be evacuated via boat due to the rising tides making a crossing impossible?), and more importantly there is a lack of distinction between which answers are which. Even Sam Altman says this, so you don’t have to take my word for anything here.

- The culture of constant blaming of people for believing the false narratives spread by AI proponents - like you should have just used a better prompt, bro! This happened with Windows too, for decades, as in “you should have just spent 50 hours turning off this list of 100 things that are super annoying and replaced core components of the OS, and then do a large subset of that again and again after every update process… bro!” At some point though, when can we say that “Windoze sux”? Or Reddit? Yes Reddit has technical superiority to Lemmy in many ways, plus there is tons of content there that while it may be elsewhere too (Facebook, Instagram, X) is not on the Fediverse, and in some cases is nowhere else. Yet… we all are here? (And some of us also there too.)

- The profit incentive makes people distrust anything coming from the source that you linked to. Facebook, Reddit, X, Google, Microsoft, Apple, etc. all enshittified, and just because chatgpt has not enshittified YET, does not mean that it will not in the future. I would not have said this as strongly here if you had linked to an ethically-sourced, non-profit or even FOSS source (something running DeepSeek?).

It is true that “AI is getting better”, and it is also true that “A’I’ sucks”, both. And many of us do not spend 10 hours a week keeping up with which of the large (but shrinking?) variety of things A"I" cannot be trusted with vs. where it might be halfway useful (if it does not lead to your early untimely demise, e.g. in interpreting medical advice). Like I would use it to retrieve a link for me to read (& VERIFY!!!) information, but I would NOT trust it to interpret an image for me. It happened to work successfully here, but this is a mere party trick? Beware, any company that is looking to fire all of its staff and rely instead purely on AI - it is not ready for anything close to what the promises claim that it is ready for.

Eventually it will surpass human intelligence, but that seems decades away

Meanwhile, one of yesterday’s headlines is about Google’s latest AI system Aletheia having autonomously solved various math theorems that humans haven’t been able to crack.

I think this might be coming faster than you think.

Measures of intelligence are all iffy at best, but I’m pretty sure “being better at raw math” isn’t a good one in isolation, especially seeing as that has been the case for a very long time.

CPUs and GPUs are basically just doing really fast math repeatedly.

That aside, I’d challenge you to find a universally accepted definition of “human intelligence” that works as a benchmark we can also use to measure machine intelligence.

afaik, we’re still murky on whether or not we are just really efficient specialised computers working with electric meat instead of electric stone.

The term normally used when talking about MI that is similar enough to human intelligence is AGI and even then, there’s not consensus on what that actually means.

This sounds like the AI effect at work. Google’s got an AI that’s autonomously generating novel publishable scientific results and now that’s dismissed as them being just “good at math.”

The term normally used when talking about MI that is similar enough to human intelligence is AGI and even then, there’s not consensus on what that actually means.

The root article that this thread is about isn’t about AGI at all, though. It’s about an AI that’s doing computer chip design.

This sounds like the AI effect at work. Google’s got an AI that’s autonomously generating novel publishable scientific results and now that’s dismissed as them being just “good at math.”

I can see why it might seem that way from the small reply I gave, but contextually it was in response to you referencing a maths-specific problem.

I also went out of my way to specifically raise the same points as in that link, wrt “intelligence” measurements and definitions.

I wasn’t advocating for one way or the other, just pointing out that (afaik) we don’t currently have a good way of defining or measuring either kind of intelligence, let alone a way to compare them [*].

So timelines on when one will surpass the other by any objective measurements are moot.

[*] Comparisons on isolated tasks are possible and useful in some contexts, but not useful in a general measurement sense without an actual idea of what we should be measuring.

As in, you can measure which vehicle is heavier, but in a context of “Which of these is more red”, weight means nothing.

The root article that this thread is about isn’t about AGI at all, though. It’s about an AI that’s doing computer chip design.

You yourself quoted a response with the phrase “human intelligence” in an ML based context.

I was clearly replying to your comment and not the article itself.

It might at that. Though there will also be a lag time where even after it comes, people have become so inured by the past lies that they are slow to adapt. And hallucinations still exist, especially in the cheaper models where significantly fewer than 10^8 (or was that 2^8?) compute cycles are expended to answer the equivalent of a random Google search query.

It would also help if humans were precise. General “AI” in the sense of movies (such as the one I showed a picture of, in the first panel) does not exist. But LLMs do.

have begun exploring new ways

This is the salient part. Meaning fuck all. But I’m sure they’ll try to unleash it on customers way too early as always with AI.

It should be restricted to science labs for at least another decade.

sounds… reasonable? >~<

In the realm of advanced chip design, you need deterministic algorithms to validate chip design. Literally any amount of hallucination in that process is going to result in an unbelievable amount of wasted resources, because setting up a chip fab for a particular design is mind-rendingly complex. You have to worry about things like how to etch features in silicon that are smaller by an order of magnitude than the wavelength of the light that you’re using to etch the features. And that’s just one of the insanely difficult problems that make the process so difficult to make reliable. You know those stats you see about poor chip yields? That and problems like it are the source - and that’s without accounting for design errors, which, while generally far less common, are far from unheard of (*cough* INTEL *cough*).

This is what I was thinking (but you seem way smarter than me): the design of a chip is about as close to pure math as a physical object can get, so in order to validate it we’re going to use software that’s just math but worse?

In theory… In practice is another story.